AI Technology Consulting

Technical Advisory for AI Adoption, AI Development & AI Strategy

With more than 10 years of hands-on AI experience, our team has handled everything from building GPU machines to constructing and operating our own GPU data center and developing AI products end to end. As technical advisors, we support your AI adoption, AI development, and AI technology strategy. Our deep expertise in machine learning fundamentals, mathematics, and statistics, combined with the hands-on ability to design and implement learning algorithms from scratch, lets us provide expert technology assessments. This makes us well suited to reviewing external vendor proposals or serving as a second opinion. We support technical research, architecture design reviews, vendor selection, and technical documentation.

LLM/Generative AI

Technical Selection & Architecture Design Advisory

Expert advice on optimal technology selection and adoption strategy.

Commercial API or open-source LLM, RAG architecture decisions, fine-tuning feasibility: we support your technical decision-making for LLM adoption. We provide the materials you need for informed decisions, including technical research reports, architecture reviews, and vendor proposal evaluations.

Advisory Areas

Commercial API vs Local LLM

- Cost comparison & estimation

- Security requirements analysis

- Latency & throughput evaluation

- Use-case specific recommendations

Open Source LLM Selection

- Llama/Mistral/Qwen comparison

- Performance evaluation

- License & usage terms analysis

- Model size vs performance tradeoffs

Fine-tuning Strategy

- LoRA/QLoRA applicability assessment

- Continued pre-training vs fine-tuning

- Data requirements & cost estimation

- Effectiveness measurement advice

RAG Design Review

- Architecture design review

- Vector DB selection advice

- Accuracy improvement proposals

- Vendor proposal technical evaluation

Consultations We Handle

- Considering LLM adoption but lack technical decision-making expertise

- Need third-party evaluation of vendor proposals

- Want to compare costs and benefits of commercial API vs local LLM

- Need to estimate fine-tuning effectiveness and costs upfront

- Need technical briefing materials for executive presentations

- Want GPU configuration and cost estimates for LLM training/inference

Our Expertise

Operating our own GPU clusters and conducting LLM training and inference daily, we can share the following specialized knowledge:

LLM Training Realities

We explain the differences between training from scratch, continued pre-training, and fine-tuning, along with realistic estimates of GPU requirements, timeframes, and costs.

Understanding Scaling Laws

We cover the optimal balance between model size and data volume based on the Chinchilla scaling laws, the mechanics of MoE (Mixture of Experts) architectures, and the latest technical trends.
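As a rough illustration of the model-size/data balance mentioned above, the sketch below uses two widely quoted rules of thumb: about 20 training tokens per parameter (the Chinchilla heuristic) and training compute of roughly 6 × parameters × tokens FLOPs. The per-GPU peak throughput and the utilization figure are illustrative assumptions, not measurements from any specific hardware.

```python
SECONDS_PER_DAY = 86_400


def chinchilla_tokens(params: float, tokens_per_param: float = 20.0) -> float:
    """Compute-optimal token count under the ~20 tokens/parameter heuristic."""
    return params * tokens_per_param


def training_flops(params: float, tokens: float) -> float:
    """Approximate training compute: about 6 FLOPs per parameter per token."""
    return 6.0 * params * tokens


def gpu_days(flops: float, peak_flops_per_gpu: float, utilization: float) -> float:
    """GPU-days needed, given an assumed peak throughput and utilization."""
    effective_flops_per_second = peak_flops_per_gpu * utilization
    return flops / effective_flops_per_second / SECONDS_PER_DAY


if __name__ == "__main__":
    n_params = 7e9                       # hypothetical 7B-parameter model
    n_tokens = chinchilla_tokens(n_params)
    compute = training_flops(n_params, n_tokens)
    # Assumed values: 1e15 FLOP/s peak per GPU, 40% sustained utilization.
    days = gpu_days(compute, peak_flops_per_gpu=1e15, utilization=0.4)
    print(f"tokens={n_tokens:.3g} flops={compute:.3g} gpu_days={days:.1f}")
```

Dividing the GPU-days by the number of GPUs gives a first-order wall-clock estimate; real runs also pay for communication overhead, restarts, and evaluation.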

GPU Selection & Cost Estimation

We provide practical guidance on how the A100, H100, and L40S compare, why NVLink matters, and how cloud GPU and on-premise options differ in total cost of ownership (TCO).

Value We Provide

Vendor-Neutral Evaluation

From a position independent of any specific vendor, we advise on optimal technology selection for your needs.

Operations-Based Expertise

From our experience operating LLM products on our own GPU clusters, we provide practical advice, not just theory.

Documentation & Meeting Support

We directly support decision-making through technical research reports, comparison documents, and participation in technical review meetings.

Related Resources

We publish technical articles about LLM/Generative AI on the Qualiteg Blog.

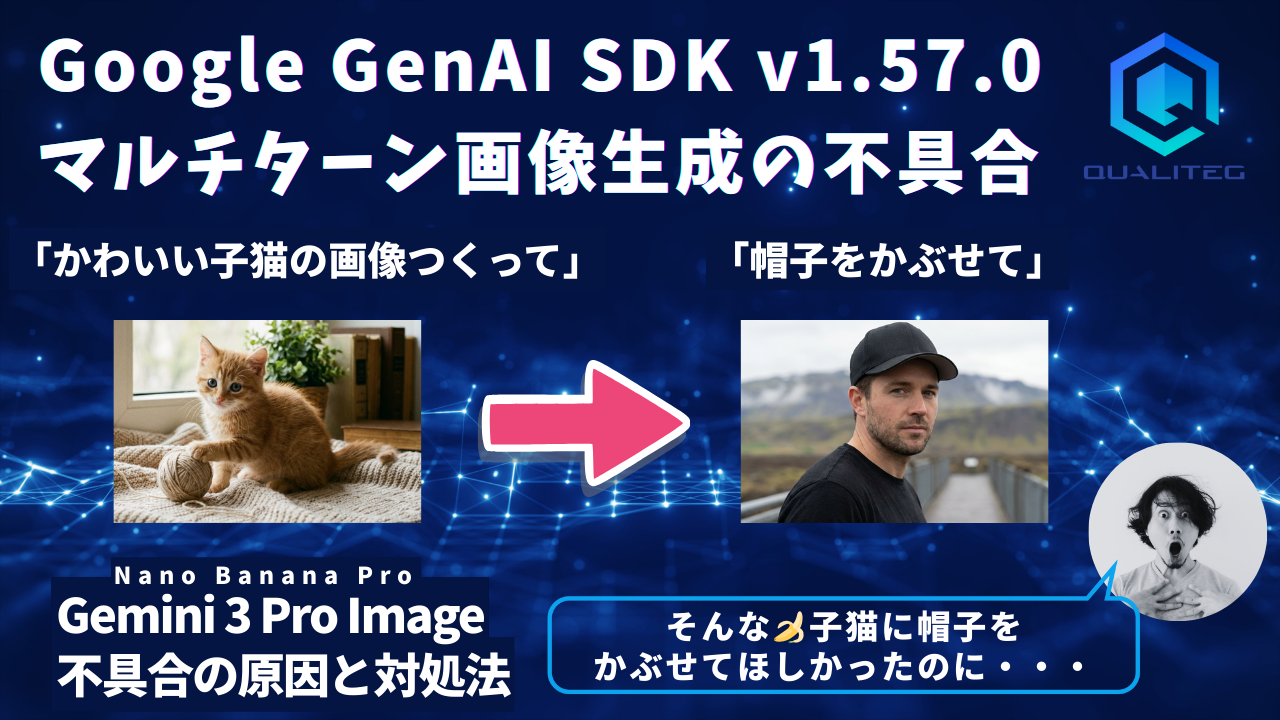

Google GenAI SDK: Multi-Turn Image Editing Issues and Workarounds

Sharing the issue and solution for unstable multi-turn image editing with Gemini 3 Pro Image streaming.

The Reality of LLM Training: A Thorough Guide from GPU Selection to Training Costs

Explaining the reality of LLM training with specific numbers on GPU requirements, duration, and costs.

Model Context Protocol Complete Implementation Guide 2025

Complete guide from MCP specification evolution to latest Streamable HTTP with full source code.

Japanese-Capable LLM Ranking 2025: Benchmark Analysis Report

Comprehensive analysis of Japanese LLMs based on Nejumi Leaderboard 4 benchmark data.

[Explainer] What Is the Tekken Tokenizer?

Explaining Mistral's new-generation Tekken tokenizer and its differences from traditional tokenizers.

Japanese-Capable! Mistral Small v3 Explained

Explaining the Japanese-capable small model that achieves 70B+ performance with only 24B parameters.

"Open Deep Research" Technical Explainer

Deep dive into HuggingFace's Open Deep Research architecture and implementation.

Introducing the Llama 3.1 Series, the Latest Large Language Models Announced by Meta

Introducing the Llama 3.1 series available in 8B, 70B, and 405B sizes.

An In-Depth Look at "Mistral NeMo 12B", the Latest LLM from Mistral AI

Explaining the features and performance of this Apache 2.0-licensed 12B-parameter model.

Trying Out "Codestral Mamba 7B", an Innovative Code Generation LLM

Hands-on report with the new code generation LLM using Mamba architecture.

Running Llama-3-Elyza-JP-8B on ChatStream®

Testing the Japanese LLM "Llama-3-Elyza-JP" 8B version said to outperform GPT-4.

AI Agents

Advisory for Coding Agents & Business Automation Agents

Accurately assess what AI agents "can do" and their "limitations."

AI agents, especially coding agents, are evolving rapidly. However, there is a significant gap between benchmark scores and real-world performance, making accurate technical understanding essential for adoption. Based on our hands-on experience testing 20+ tools, we advise on optimal agent selection and implementation strategy for your organization.

Advisory Domains

Coding Agents

- Claude Code / Codex CLI / Aider

- GitHub Copilot Agent

- Cursor / Windsurf / Cline

- Tool comparison & selection

Tool Use & MCP Design

- External API integration design

- MCP server implementation

- File operations & DB connections

- Tool selection optimization

Multi-Agent Systems

- Inter-agent coordination design

- CrewAI/AutoGen/LangGraph

- Role assignment & workflows

- Orchestration patterns

Risk & Limitation Assessment

- Context window constraints

- Session memory loss

- Benchmark vs real-world gaps

- Cost & ROI estimation

We Help With These Challenges

- Want to adopt coding agents for the dev team but unsure which tool is best

- Need comparative evaluation of Claude Code, Cursor, Copilot, and other tools

- Want feasibility assessment and limitation analysis for AI agent business automation

- Need technical validation of AI agent proposals from vendors

- Want to understand AI agent adoption risks (context limits, accuracy constraints, etc.)

- Need training on coding agent best practices for development teams

Our Expertise

Based on our daily use of coding agents and hands-on testing of 20+ tools, we can share the following specialized insights.

Coding Agent Comparative Analysis

Practical comparison of major tools including Claude Code, Codex CLI, Aider, Cursor, Windsurf, Cline, GitHub Copilot Agent, and Amazon Q Developer. Terminal-based vs IDE-integrated trade-offs, model switching capabilities, and pricing analysis.

Understanding Structural Limitations

Context window constraints (approximately 200K tokens, which can be depleted after roughly 50 tool uses), session memory loss, and accuracy degradation in long-running tasks. We explain the fundamental limitations of current agent architectures.
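The figures above imply a simple budget: a window of about 200K tokens that lasts about 50 tool uses averages roughly 4K tokens per tool use. The sketch below turns that arithmetic into a small planning helper; the function names are hypothetical, and real agents vary widely in per-call token cost.

```python
def avg_tokens_per_tool_use(context_tokens: int, tool_uses: int) -> float:
    """Average context consumed per tool use, from observed totals."""
    return context_tokens / tool_uses


def remaining_tool_uses(context_tokens: int, used_tokens: int, tokens_per_use: int) -> int:
    """How many more tool uses fit before the window is exhausted."""
    remaining = context_tokens - used_tokens
    return max(0, remaining // tokens_per_use)


if __name__ == "__main__":
    per_use = avg_tokens_per_tool_use(200_000, 50)
    print(f"average per tool use: {per_use:.0f} tokens")
    # Hypothetical session that has already consumed 120K tokens.
    print("tool uses left:", remaining_tool_uses(200_000, 120_000, 4_000))
```

A budget like this helps decide when to split a long task into separate sessions or to summarize intermediate results before the window fills up.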

Benchmark vs Production Reality

There's a significant gap between SWE-bench scores and actual development performance. We explain why this gap exists and what conditions are needed to achieve production results.

Value We Provide

Practice-Based Insights

Our team uses coding agents daily and has accumulated extensive hands-on testing experience, enabling advice based on real experience.

Realistic Expectation Setting

Without being swayed by vendor or media hype, we honestly communicate what AI agents "can realistically do" and "cannot yet do."

Implementation Strategy Design

We propose realistic adoption roadmaps considering team skill levels, project characteristics, and security requirements.

Related Resources

We publish technical articles about AI agents on the Qualiteg Blog.

The Current State and Future Outlook of Coding Agents, Part 2: Major Tool Comparison and Structural Challenges

Detailed comparison of major tools and structural challenges including context window limitations and inter-session memory loss.

20 AI Coding Agents! Current State and Future Outlook, Part 1: The Big Picture and Fundamentals

A comprehensive introduction to 20+ AI coding tools, comparing commercial services and open source from a practical perspective.

Complete Guide to LLM Security in the Zero Trust Era: Looking Ahead to the Evolution Toward Guardian Agents

Explaining three transformations: Zero Trust, LLM security, and the evolution toward Guardian Agents in the AI Agent era.

LLM Infrastructure

From GPU Environment Design to Distributed Inference

Infrastructure that doesn't just "work" but "scales."

Production LLM operations require proper GPU selection, memory management, inference optimization, and load balancing. We support optimal infrastructure design and construction from single GPU servers to large-scale GPU clusters, tailored to your requirements.

Technical Domains

GPU Infrastructure Design

- GPU selection (H100/A100/L40S)

- Server configuration design

- Network design (NVLink/InfiniBand)

- Storage design

Inference Optimization

- Quantization (GPTQ/AWQ/GGUF)

- vLLM/TGI utilization

- Batch processing optimization

- KV cache management

Distributed Processing

- Tensor/Pipeline parallelism

- Multi-GPU inference

- Multi-node clusters

- Load balancing design

Cloud/On-Premise

- AWS/GCP/Azure GPU utilization

- On-premise GPU server setup

- Hybrid configurations

- Cost optimization
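For the quantization and KV cache items listed above, a first-order memory estimate is often enough to rule GPU configurations in or out. The sketch below uses the standard approximations: weight memory is parameter count times bits per weight, and KV cache memory is 2 (keys and values) × layers × KV heads × head dimension × sequence length × batch size × bytes per element. The model shape in the example is hypothetical, and the estimate ignores activations, framework overhead, and allocator fragmentation.

```python
def weight_bytes(num_params: int, bits_per_weight: int) -> int:
    """Memory for model weights at a given precision (e.g. 16, 8, or 4 bits)."""
    return num_params * bits_per_weight // 8


def kv_cache_bytes(
    num_layers: int,
    num_kv_heads: int,
    head_dim: int,
    seq_len: int,
    batch_size: int,
    bytes_per_elem: int = 2,
) -> int:
    """Memory for the KV cache: one key and one value tensor per layer."""
    return 2 * num_layers * num_kv_heads * head_dim * seq_len * batch_size * bytes_per_elem


if __name__ == "__main__":
    gib = 1024 ** 3
    # Hypothetical 8B-parameter model: 32 layers, 8 KV heads, head_dim 128.
    print("fp16 weights :", weight_bytes(8_000_000_000, 16) / gib, "GiB")
    print("4-bit weights:", weight_bytes(8_000_000_000, 4) / gib, "GiB")
    print("KV cache     :", kv_cache_bytes(32, 8, 128, 4096, 8) / gib, "GiB")
```

Because the KV cache grows linearly with both sequence length and batch size, it is frequently the term that limits concurrency once the weights have been quantized.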

Past Support Examples

We have extensive experience ranging from GPU cluster construction to inference environment optimization.

Consulting Firm

- GPU server/cluster configuration estimation support

- Optimal configuration proposals based on budget and requirements

Startup

- GPU server design support for high-load environments

- Optimization balancing inference performance and cost

System Vendor

- Internal local LLM environment setup support

- Inference infrastructure construction using vLLM

Qualiteg's Strengths

In-House GPU Infrastructure Operations

We operate our own GPU cluster with NVIDIA H100/A100, and can share insights gained from real-world operations directly.

Hardware to Software

We provide integrated support from hardware-level GPU selection and server configuration to inference engine settings like vLLM and TGI.

Cost Optimization Expertise

We can propose solutions considering TCO (Total Cost of Ownership), including appropriate use of cloud GPU, on-premise GPU, and inference APIs.
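A simplified way to frame the cloud versus on-premise comparison is a break-even calculation: on-premise has an upfront purchase cost plus monthly operating cost, while cloud cost scales with GPU-hours used. The sketch below is only an illustration of that idea; every figure in it is a made-up placeholder, and a real TCO analysis would also account for depreciation, staffing, power pricing, and utilization changes over time.

```python
from typing import Optional


def breakeven_months(
    capex: float,
    onprem_monthly_opex: float,
    cloud_hourly_rate: float,
    gpu_hours_per_month: float,
) -> Optional[float]:
    """Months until on-premise spending drops below cumulative cloud spending.

    Returns None when cloud is cheaper every month, so on-premise never pays off.
    """
    cloud_monthly = cloud_hourly_rate * gpu_hours_per_month
    monthly_saving = cloud_monthly - onprem_monthly_opex
    if monthly_saving <= 0:
        return None
    return capex / monthly_saving


if __name__ == "__main__":
    # Placeholder numbers for illustration only.
    heavy = breakeven_months(240_000, 2_000, 4.0, 3_000)
    light = breakeven_months(240_000, 2_000, 4.0, 400)
    print("heavy usage break-even (months):", heavy)
    print("light usage break-even (months):", light)
```

The second case shows why utilization drives the decision: at low GPU-hours, the monthly cloud bill never exceeds the on-premise operating cost, so the purchase is never recovered.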

Related Resources

Explore our LLM Inference Infrastructure Provisioning course on the Qualiteg Blog.

The Reality of LLM Training: From GPU Selection to Cost Analysis

Real-world GPU requirements and costs for LLM training with examples from LLaMA 2 and DeepSeek-V3.

Is Your Code Running on CPU Instead of GPU? ~ONNX Runtime cuDNN Warning Fix~

How to resolve the "libcudnn.so.9" error when running GPU inference with ONNX Runtime.

LLM Inference Provisioning Part 5: From GPU Node Configuration to Load Testing

Covers GPU node configuration, load testing, trade-off considerations, and real server examples.

LLM Inference Provisioning Part 4: Selecting Inference Engines

Compares inference engines like vLLM and TGI, explaining selection criteria.

LLM Inference Provisioning Part 3: Estimating Model Inference Memory Consumption

Explains GPU memory consumption factors including model footprint and KV cache.

LLM Inference Provisioning Part 2: Estimating LLM Service Request Volume

Learn how to estimate expected request volume for calculating required GPU nodes.

LLM Inference Provisioning Part 1: Basic Concepts and Inference Speed

Explains the fundamental concepts and inference speed considerations for building LLM inference infrastructure.

Optimal GPU Server Capacity Calculation: Queuing Theory and Practical Models

Learn how to calculate maximum user support capacity for GPU servers using queuing theory.

2025 NVIDIA GPU Quick Search Tool

Search and filter NVIDIA GPUs by generation and specs: Blackwell, Hopper, Ada Lovelace, and more.

[ChatStream] GPU Server Configuration for Large LLM Inference

Video explanation of GPU server/cluster configuration for large LLM inference using Llama3-70B as example.

Speculative Decoding: Accelerating LLM Inference Speed

A technique to speed up inference by using a small model to predict ahead, reducing computation load on larger models.

LLM Security

Addressing LLM-Specific Vulnerabilities and Safe AI Operations

Balancing AI convenience with safety.

LLMs have unique security risks different from traditional software. We support practical countermeasures against LLM-specific vulnerabilities—prompt injection, information leakage, harmful outputs—and help establish safe AI system operations.

LLM-Audit

We develop and provide LLM-Audit, an LLM security assessment product. Leveraging the expertise gained from developing this product, we support strengthening your LLM system's security.

Vulnerabilities & Risks We Address

Prompt Injection

- Direct injection

- Indirect injection

- Jailbreak attacks

- System prompt leakage

Information Leakage

- Training data extraction

- PII (Personal Information) leakage

- Unintended confidential output

- Context leakage

Harmful Output

- Harmful content generation

- Bias/discriminatory output

- Misinformation/hallucination

- Copyright infringement risk

System Attacks

- DoS attacks (resource exhaustion)

- Model theft

- API key leakage

- Supply chain attacks

Support Process

Challenge Hearing

Understanding your LLM system architecture, use cases, and security concerns

Latest Trends Sharing

Sharing latest LLM security attack methods, industry trends, and best practices

LLM-Audit Implementation

Implementation and operation support using our in-house LLM-Audit tool for vulnerability assessment

Qualiteg's Strengths

Product Development Expertise

We have deep technical knowledge in LLM security accumulated through developing LLM-Audit.

Up-to-Date Attack Knowledge

We track newly discovered attack methods and keep our assessment items up to date.

Practical Countermeasure Proposals

Based on our experience actually operating LLM systems, we propose implementable countermeasures, not just theory.

Related Resources

Read our technical articles on LLM security on the Qualiteg Blog.

Why Did Enterprise Security Become So Complex? From the AD + Proxy Era to Modern Cloud Support

Explaining the history of enterprise security evolution from firewall & proxy era to SASE/SSE, and its application to LLM security.

Foundational Technologies Supporting Enterprise AI Security - Active Directory Part 4: Proxy Servers and Integrated Windows Authentication

Explaining proxy server and Kerberos authentication integration for monitoring ChatGPT and Claude access.

Foundational Technologies Supporting Enterprise AI Security - Active Directory Part 3: Domain Join

Explaining the domain join process for clients and servers, including the underlying mechanisms.

Foundational Technologies Supporting Enterprise AI Security - Active Directory Part 2: Building a Domain Environment

Detailed guide to building an Active Directory domain environment for AI security testing.

Complete Guide to LLM Security in the Zero Trust Era

Explaining three transformations: Zero Trust, LLM security, and the evolution toward Guardian Agents in the AI Agent era.

Foundational Technologies Supporting Enterprise AI Security - Active Directory Part 1: Understanding the Basic Concepts

Explaining Active Directory fundamentals for integrating AI security solutions with enterprise environments.

What Is the "Guardian Agent", the New Watchdog of the AI Agent Era?

Explaining Gartner's Guardian Agent concept and the new watchdog needed in the AI Agent era.

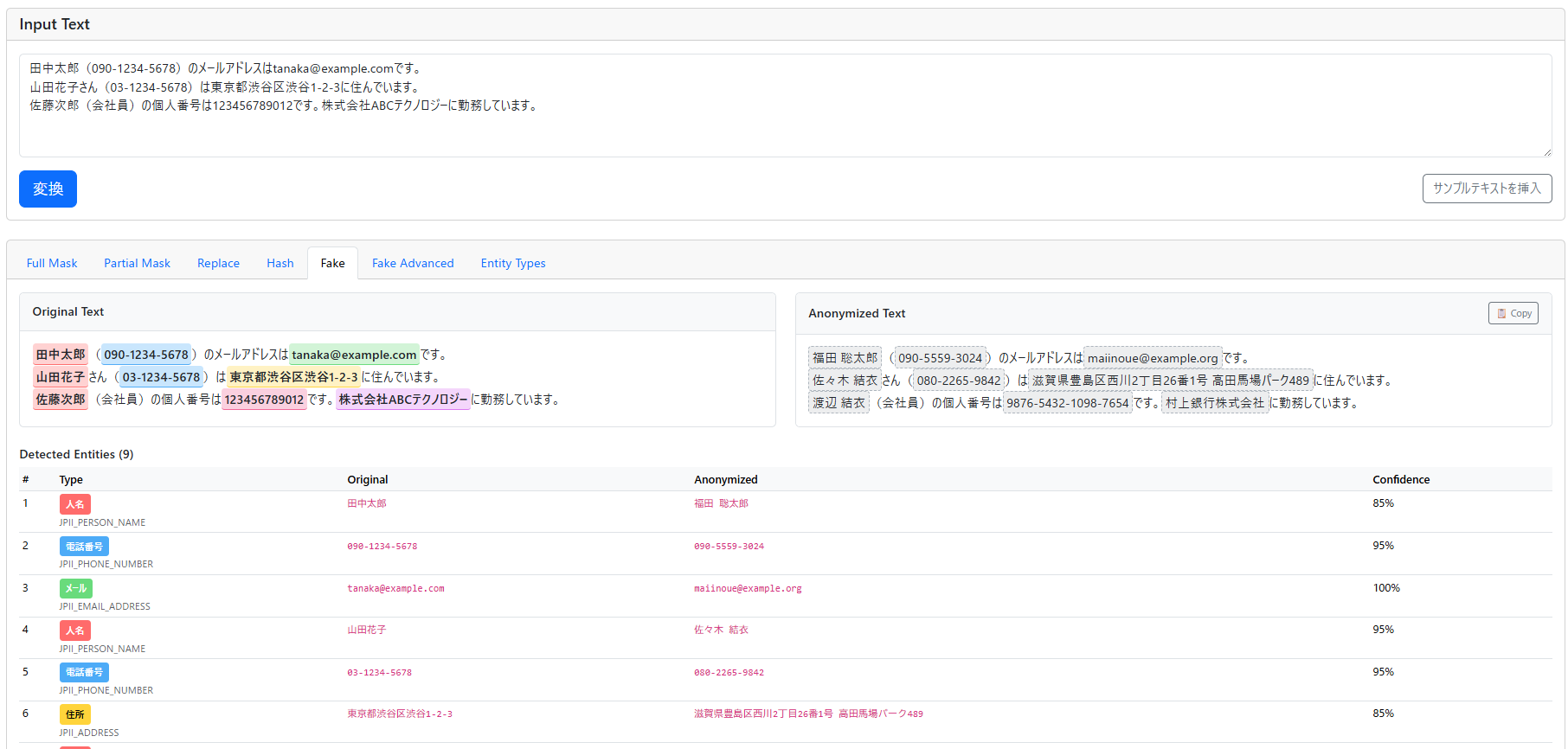

Staged PII Masking for LLM Applications

Explaining PII detection in files and staged masking processing by LLM-Audit PII Protector.

Enterprise Information Defense in the LLM Era: New Challenges in PII Security

Explaining the new definition of PII security and information defense strategies for enterprises in the generative AI era.

LLM-Audit: Frontline of LLM Attack and Defense

Introducing our LLM security solution "LLM-Audit" and explaining countermeasures for LLM-specific vulnerabilities.

LLM Security: How to Detect Hallucinations

Explaining the LYNX hallucination detection model for RAG and methods to verify LLM output faithfulness.

[LLM Security] Llama Guard: The First Step Toward AI Safety

Explaining Meta's Llama Guard and its safeguard features for LLM input/output.

[LLM Security] Zero-Resource Black-Box Hallucination Detection

Explaining SelfCheckGPT, a zero-resource hallucination detection method without external databases.

AI Avatar/AI Human

AI Character Development Combining Voice AI and Image Generation

Giving AI a "face" and "voice."

By combining LLM dialogue capabilities with speech recognition, speech synthesis, and image generation technologies, we create more natural and approachable AI interfaces. We support applications across customer support, education, entertainment, and more.

Technical Domains

Speech Recognition AI

- Whisper utilization

- Real-time speech recognition

- Speaker identification

- Noise resilience enhancement

Speech Synthesis AI

- High-quality TTS

- Emotion/intonation control

- Voice Cloning

- Multilingual support

Image Generation/Avatar

- Stable Diffusion utilization

- Character generation

- Lip sync

- Expression/motion control

Integrated Systems

- Dialogue × Voice × Video integration

- Real-time processing

- Low-latency design

- Multi-platform support

Use Cases

- 24/7 AI customer support

- AI tutors for education and training

- AI guide characters for stores and facilities

- AI characters for entertainment

- Adding face and voice to internal AI assistants

Qualiteg's Strengths

Multimodal Integration

We have experience developing systems that combine multiple AI technologies—LLM, voice, and image.

Real-Time Processing Expertise

We achieve natural conversation experiences through low-latency pipeline design for speech recognition → LLM → speech synthesis.

GPU Infrastructure Utilization

Using our in-house GPU environment, we can quickly verify model performance and select optimal combinations.

Related Resources

Explore our technical articles on AI lip-sync technology published on the Qualiteg Blog.

AI Lip-Sync Part 4: LSTM Limitations and the Transition to Transformer

Exploring LSTM model limitations and the migration to higher-precision Transformer models.

AI Lip-Sync Part 3: Learning Mouth Shape Parameters from wav2vec Features

Building models to learn mouth shape parameters from wav2vec feature vectors.

AI Lip-Sync Part 2: AI-Based Drift Correction

Technical explanation of AI-powered drift correction to automatically synchronize audio and video.

AI Lip-Sync Part 1: Phonemes and wav2vec

Introduction to phoneme concepts and wav2vec utilization—the foundation of AI lip-sync technology.

NLP/Natural Language Processing

Japanese-Specialized Advanced Text Processing & PII Detection

Embracing the depth and complexity of the Japanese language.

Global NLP tools are designed primarily for English and often fail to handle Japanese-specific writing systems and context-dependent semantics adequately. Our team includes experts with many years of NLP research experience. We cover a wide range of technologies, from morphological analysis with established libraries (MeCab/Sudachi) and NER (spaCy/GiNZA/CRF) to the latest BERT models and LLMs, and we choose among them according to use case, precision requirements, and speed demands. Drawing on our proprietary corpus and a deep understanding of Japanese cultural context, we have productized this work as a PII detection engine that is used in practice. We deliver truly practical NLP solutions for Japanese businesses.

The Beauty and Complexity of Japanese — And Qualiteg's Approach

Addressing the complexity of Japanese requires a well-balanced combination of rule-based heuristic methods and machine learning/deep learning approaches. Our strength lies in deeply understanding both technologies and being able to select and integrate the optimal methods for each challenge.

A Rare Multi-Layered Writing System

Hiragana, katakana, kanji, Roman letters, and numerals combine organically to create rich expressiveness. A single company name can be written as "株式会社国際情報技術研究所" (the full formal name), "KJK研究所" (a short form using Roman letters), or "ケージェーケー研究所" ("KJK" spelled out in katakana). This flexibility is a strength of Japanese, but it also makes computer processing challenging.

Context Determines Meaning

"三沢から連絡がありました" (Misawa contacted us): is "Misawa" a person, a company, or a place? In Japanese, this cannot be determined without context. In English, "Mr. Misawa" (person) and "Misawa City" (place) are clearly distinguished, but Japanese requires examining the broader context.

Information from the Honorific System

"山田が来ました" (no honorific), "山田さんが来ました" (-san), "山田様がいらっしゃいました" (-sama, with a respectful verb), "山田先生がお見えになりました" (-sensei, with a respectful verb): the type of honorific helps determine a person's status and the required masking level. Honorifics carry multi-layered information about relationships and social standing.

Technical Domains

PII Detection

- Japanese-specialized PII detection engine

- Honorific-driven name detection

- Full support for Japanese address formats

- Phone/email pattern detection

- Context-aware high-precision detection

Named Entity Recognition (NER)

- Person/organization/location identification

- Japanese context enhancer

- spaCy/GiNZA utilization

- CRF/BiLSTM-CRF models

- Transformer-based deep learning

Morphological Analysis

- MeCab/Sudachi/Janome utilization

- Custom dictionary construction

- POS-based context judgment

- Dependency parsing

- Technical terms & neologisms support

Text Masking & De-identification

- Staged PII masking

- Safe processing before LLM use

- Multiple file formats (PDF/Excel/PPT)

- Hidden information detection

- Reversible & irreversible masking
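The difference between reversible and irreversible masking in the list above can be shown with a minimal sketch. Reversible masking replaces each detected value with a numbered placeholder and keeps a mapping, so the original can be restored after the LLM call; irreversible masking discards the value. This is an illustration using email addresses only, with a deliberately simple ASCII regex and hypothetical function names; it is not how LLM-Audit is implemented.

```python
import re

# Simplified ASCII-only email pattern, sufficient for illustration.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9-]+(?:\.[A-Za-z0-9-]+)+")


def mask_reversible(text: str) -> tuple[str, dict[str, str]]:
    """Replace each email with a numbered placeholder and return the mapping."""
    placeholder_for: dict[str, str] = {}  # original value -> placeholder

    def replace(match: re.Match) -> str:
        value = match.group(0)
        if value not in placeholder_for:
            placeholder_for[value] = f"<EMAIL_{len(placeholder_for) + 1}>"
        return placeholder_for[value]

    masked = EMAIL.sub(replace, text)
    mapping = {placeholder: value for value, placeholder in placeholder_for.items()}
    return masked, mapping


def unmask(text: str, mapping: dict[str, str]) -> str:
    """Restore original values from placeholders."""
    for placeholder, value in mapping.items():
        text = text.replace(placeholder, value)
    return text


def mask_irreversible(text: str) -> str:
    """Replace every email with the same token; the original is not recoverable."""
    return EMAIL.sub("<EMAIL>", text)
```

Reusing the same placeholder for repeated values lets the LLM still reason about "the same person" across a document without ever seeing the underlying address.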

Processing Levels: Precision vs Speed Tradeoffs

In real business scenarios, the balance between speed and precision must be flexibly adjusted based on use case, purpose, and security requirements. We propose optimal processing levels across 5 tiers based on data importance.

Ultra-Fast Scan

10K+/sec. High-speed pattern matching with regex. Instantly detects clearly formatted information like phone numbers, email addresses, and credit card numbers.

Balanced Mode

1K+/sec. Morphological analysis engine + rule-based inference. Understands Japanese grammar structure with POS-based context judgment.

High-Precision NER

100+/sec. spaCy/GiNZA + CRF/BiLSTM-CRF. Advanced named entity recognition via machine learning with flexible judgment considering entire sentence structure.

Transformer Deep Learning

100/sec. RoBERTa/DeBERTa Japanese models. Deep understanding of entire document context, inferring long-range dependencies and implicit information.

LLM Integration

10/sec. Large Language Model integration. Human-level language understanding that comprehends abbreviations, jargon, and implicit references, proposing appropriate masking.
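To make the fastest tier concrete, the sketch below shows what a regex-only scan for clearly formatted items might look like. The patterns are simplified assumptions for illustration (hyphenated Japanese-style phone numbers, ASCII email addresses, 16-digit card numbers) and are not the patterns used in any Qualiteg product. Digit lookarounds are used instead of `\b` because word boundaries do not behave as expected between Japanese characters and digits.

```python
import re

# Simplified illustrative patterns; production patterns need far more care.
PATTERNS = {
    "phone": re.compile(r"(?<![0-9])0[0-9]{1,4}-[0-9]{1,4}-[0-9]{4}(?![0-9])"),
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9-]+(?:\.[A-Za-z0-9-]+)+"),
    "card": re.compile(r"(?<![0-9])(?:[0-9]{4}[- ]?){3}[0-9]{4}(?![0-9])"),
}


def fast_scan(text: str) -> list[tuple[str, str]]:
    """Return (label, matched_text) pairs in order of appearance."""
    hits = []
    for label, pattern in PATTERNS.items():
        for match in pattern.finditer(text):
            hits.append((match.start(), label, match.group(0)))
    hits.sort()
    return [(label, value) for _, label, value in hits]


if __name__ == "__main__":
    sample = "電話は03-1234-5678、メールはtaro@example.comです。"
    for label, value in fast_scan(sample):
        print(label, value)
```

A scan like this cannot tell whether "三沢" is a person or a place, which is why the slower tiers exist; a typical design runs the cheap scan on everything and escalates only the documents that need deeper analysis.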

Consultations We Handle

- Global tools have insufficient Japanese detection accuracy, requiring additional development costs

- Need to safely remove personal information before inputting internal data to LLMs

- Want to automatically extract and mask specific information from contracts and meeting minutes

- Need high-precision Japanese text classification, summarization, and sentiment analysis

- Want to auto-classify and route customer support inquiries

- Need accurate extraction of names, organizations, and product names from internal documents

- Need PII detection that handles Japan-specific address formats (Kyoto street names, etc.)

Our Expertise

With deep expertise in Japanese NLP technology and hands-on experience developing and operating PII detection/masking products, we can share the following specialized knowledge.

Japanese-Specialized Detection Logic

Honorific-driven detection (identifying names using "様" or "さん" as cues), hierarchical pattern matching for address detection, and other techniques leveraging Japanese cultural characteristics.
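The honorific cue described above can be sketched with a single regular expression: a short run of kanji immediately followed by an honorific is treated as a name candidate. This is a minimal illustration under simplifying assumptions (kanji-only names of one to four characters, four honorifics); it will produce false positives such as "皆様" (everyone), which is why candidates would normally pass through further filtering.

```python
import re

# One to four kanji (including the repetition mark 々) followed by an honorific.
NAME_WITH_HONORIFIC = re.compile(r"([\u4e00-\u9fff々]{1,4})(さん|様|先生|氏)")


def find_name_candidates(text: str) -> list[tuple[str, str]]:
    """Return (name_candidate, honorific) pairs found in the text."""
    return [(m.group(1), m.group(2)) for m in NAME_WITH_HONORIFIC.finditer(text)]


if __name__ == "__main__":
    for sentence in (
        "山田が来ました",
        "山田さんが来ました",
        "山田様がいらっしゃいました",
        "山田先生がお見えになりました",
    ):
        print(sentence, "->", find_name_candidates(sentence))
```

The first sentence yields no candidate because there is no honorific to anchor on, so bare names still need the dictionary-based and context-based methods described elsewhere on this page.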

Multi-Layer Filtering Technology

Morphological filtering, statistical filtering (surname/given name database matching), contextual filtering, and exclusion list application—composite approaches that improve precision.

Optimization Technology Application

Model size reduction through quantization, improved GPU efficiency via dynamic batching, lightweight model creation through knowledge distillation—achieving "high precision yet lightweight."

Qualiteg's Strengths

Years of Research & Expert Team

Our team includes specialists who have been researching NLP for many years. From morphological analysis and NER using established libraries to the latest Transformer/LLM technologies, we understand the evolution and propose optimal solutions.

Original Corpus & Productization

We possess proprietary Japanese corpora and have achieved productization as a PII detection engine. We have not just theory, but implementation capabilities proven in production environments.

Heuristics × Machine Learning Fusion

Japanese complexity requires both meticulous rule-based approaches and deep learning generalization. We deeply understand both and optimally combine them for each challenge.

Full-Stack Technology Coverage

From morphological analysis using established libraries (MeCab/Sudachi), NER (spaCy/GiNZA/CRF), to BERT/RoBERTa/DeBERTa and LLM—we provide comprehensive technologies matching your precision and speed requirements.

Deep Understanding of Japanese

We develop technology with deep understanding of Japanese cultural background, business practices, and linguistic structure. We achieve precision that sets us apart from global tools that merely "support Japanese."

Flexible Level Design

We propose optimal approaches from 5 processing levels based on use case, budget, and security requirements—preventing cost increases from excessive precision pursuit.

LLM-Audit

"LLM-Audit" is a comprehensive security solution for auditing enterprise LLM usage. It enables bidirectional auditing—monitoring both outbound (employee to LLM) and inbound (LLM to employee) communications. With protection against prompt injection, jailbreak attack defense, and harmful content detection/blocking, it strengthens security and compliance for enterprise AI adoption.

Qualiteg-PII-Detector

"Qualiteg-PII-Detector" is our Japanese-specialized PII detection engine integrated into LLM-Audit. It condenses years of our NLP technology expertise to enable high-precision personal information detection and masking before LLM input. It also supports detection of "hidden PII" in various file formats including PowerPoint, Excel, PDF, and images. This productized PII detection engine is proof of our Japanese NLP technical capabilities.

AI-Driven Software Development Transformation

Integrating AI across the development lifecycle to boost productivity and quality

Integrate AI throughout the development process to achieve sustainable productivity gains.

Software development is facing a structural crisis. 23-42% of development time is wasted on technical debt, 37% of projects fail due to unclear requirements, and 87% of CTOs recognize technical debt as the biggest obstacle to innovation. This service integrates AI throughout the development lifecycle, supporting transformation across the entire process—not just point optimizations.

Structural Challenges in Software Development

The software development market is projected to reach $570 billion in 2025 and exceed $1 trillion by 2030. However, behind this rapid growth, development teams face serious challenges.

Common Challenge Patterns

Technical Debt Accumulation

- More time fixing existing code than building new features

- Accumulated "quick fix" code becomes a future burden

- Refactoring constantly postponed

- $306K annual cost per million lines of code

Requirement Ambiguity & Rework

- "This doesn't match requirements" discovered late in development

- Large number of defects found during testing phase

- Misalignment between stakeholders

- Late-stage fixes cost 10-100x more

Documentation Gaps & Knowledge Silos

- "Code exists but no specification documents"

- Only specific engineers can maintain certain code

- New members take months to onboard

- Lack of common language for international collaboration

Unclear Change Impact

- Even small changes require full system testing

- Legacy code nobody wants to touch

- Long testing periods before each release

- 15-25% of dev time spent on impact analysis

Solution Approach Through AI Integration

Traditional AI adoption has been limited to optimizing specific tasks like code completion. However, true transformation requires integrating AI across the entire development lifecycle.

Traditional Approach

- Automation of specific tasks

- Human-led throughout

- Siloed tools

- 10-20% efficiency gains

Transformed Approach

- Integration across lifecycle

- Human-AI collaboration

- Connected data & context

- 30-50%+ productivity improvement

Staged Maturity Model

Rapid transformation carries high risk—we recommend a phased approach.

AI-Assisted

Efficiency gains on individual tasks like code completion and documentation. Developers lead, AI supports.

AI-Augmented

AI utilization spanning multiple phases. AI proposes, humans decide and approve.

AI-Driven

AI supports entire lifecycle. AI drafts and executes, humans supervise and ensure quality.

AI Utilization Across Development Lifecycle Phases

Each phase of software development has unique challenges. By appropriately leveraging AI, these challenges can be addressed to improve overall project success rates. Below are specific AI applications for each phase.

Planning & Requirements Phase

The requirements definition phase determines project success or failure. Ambiguity and oversights here cause rework and budget overruns in later phases. AI helps identify contradictions and ambiguities that humans might miss, strengthening the project foundation.

Design & Architecture Phase

The design phase defines the system skeleton and requires technology selection and architecture decisions. Documentation consumes significant time, and experienced architects' knowledge tends to become siloed. AI makes design work more efficient and helps share that knowledge across the team.

Implementation & Coding Phase

The coding phase is where developers spend most of their time. AI coding assistants have seen the most adoption here, but extending beyond simple code completion to review support and refactoring suggestions yields greater benefits.

Testing & Quality Assurance Phase

The testing phase that ensures quality often becomes a bottleneck. AI support for test case design, test code implementation, and debugging enables efficient testing without compromising quality.

Deployment & Operations Phase

This phase covers production releases and stable operations. AI provides powerful support for answering "Is this change really safe?" and for enabling rapid incident response.

Specific Use Cases

To help visualize AI's effectiveness, here are common challenges and how AI can solve them. These are scenarios frequently raised in client consultations.

Legacy Code Visualization & Auto-Documentation

"The person who built this system has left, and nobody understands the full picture." "It took a month to explain the system to a new vendor." "The offshore team spent weeks just understanding the code." The longer a system runs, the more common these problems become. Documentation is outdated and no longer matches reality, and few people can read the code. Enormous time is spent just understanding the system each time a new feature or maintenance task comes up.

Our AI agents analyze the entire codebase to create documentation organizing module structure, processing flows, and data flows. They systematically compile explanations like "What does this class do?" and "What's this function's role?" to quickly produce "System Overview" for new team members and "Module Reference" for maintainers. This converts siloed knowledge into accessible explicit knowledge.

Requirements-Test Traceability Automation

"Where are the test cases for this feature again?" "Requirements changed, but which tests need updating?" "Tests pass, but are we really covering these requirements?" These questions arise because requirements documents and test cases are managed separately, which leaves their relationship unclear. As a result, the impact of a requirement change is hard to determine, and releases ship with undetected test gaps.

Our AI agents create traceability matrices that map requirements to test cases. They visualize coverage by listing "Which tests cover this requirement?" and "Which requirements lack test coverage?" and suggest additional test perspectives for gaps. This moves teams from "sort of testing" to evidence-based quality assurance.
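The core of a traceability matrix can be sketched in a few lines, assuming each test has already been linked to the requirement IDs it verifies (in practice, producing those links is the hard part). The names `build_traceability` and `test_links` and the ID format are hypothetical, used only for illustration.

```python
from collections import defaultdict


def build_traceability(requirements, test_links):
    """Map requirements to tests and report gaps.

    requirements: iterable of requirement IDs.
    test_links:   dict of test name -> list of requirement IDs it verifies.
    Returns (covered, gaps, orphans):
      covered: requirement ID -> sorted list of tests covering it
      gaps:    requirement IDs with no test at all
      orphans: IDs referenced by tests but missing from the requirements list
    """
    requirements = list(requirements)
    known = set(requirements)
    matrix = defaultdict(list)
    for test_name, req_ids in test_links.items():
        for req_id in req_ids:
            matrix[req_id].append(test_name)
    covered = {r: sorted(matrix[r]) for r in requirements if r in matrix}
    gaps = [r for r in requirements if r not in matrix]
    orphans = sorted(r for r in matrix if r not in known)
    return covered, gaps, orphans


if __name__ == "__main__":
    # Hypothetical sample data.
    reqs = ["REQ-1", "REQ-2", "REQ-3"]
    links = {
        "test_login": ["REQ-1"],
        "test_login_lockout": ["REQ-1", "REQ-2"],
        "test_old_export": ["REQ-9"],
    }
    covered, gaps, orphans = build_traceability(reqs, links)
    print("covered:", covered)
    print("gaps:", gaps)
    print("orphans:", orphans)
```

The `gaps` list answers "Which requirements lack test coverage?", while `orphans` flags tests that still point at requirements that no longer exist.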

Technical Debt Visualization & Executive Communication

Executives ask "Why is new feature development so slow?" Requests for "refactoring time" get blocked with "Will that increase revenue?" Development teams know technical debt is accumulating but lack the words to explain it to business stakeholders. The result is a vicious cycle: debt is ignored and development velocity keeps declining.

Our AI agents analyze the codebase to create technical debt status reports. They identify duplicate code, high-complexity functions, and legacy library dependencies, and rank improvement priorities. They also create executive reports that translate findings into business impact, such as "Continued neglect will increase effort" and "This improvement will boost development speed by X%." This helps secure refactoring budgets.
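One of the signals named above, high-complexity functions, can be approximated mechanically. The sketch below assumes Python source and uses a simplified branch count (one plus the number of branching constructs) as a rough stand-in for cyclomatic complexity. The name `complexity_report`, the set of counted node types, and the threshold are illustrative assumptions, not an established standard.

```python
import ast

# Constructs counted as a branch in this simplified metric (an assumption).
BRANCH_NODES = (ast.If, ast.For, ast.While, ast.ExceptHandler, ast.BoolOp, ast.IfExp)


def complexity_report(source: str, threshold: int = 5):
    """Return (function name, score) pairs at or above threshold, worst first.

    Note: branches inside nested functions also count toward the enclosing
    function, because ast.walk visits every descendant node.
    """
    tree = ast.parse(source)
    report = []
    for node in ast.walk(tree):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            score = 1 + sum(isinstance(n, BRANCH_NODES) for n in ast.walk(node))
            if score >= threshold:
                report.append((node.name, score))
    return sorted(report, key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    # Hypothetical sample input.
    sample = (
        "def simple():\n"
        "    return 1\n"
        "\n"
        "def busy(items):\n"
        "    for item in items:\n"
        "        if item:\n"
        "            pass\n"
        "        if item > 1:\n"
        "            pass\n"
        "    return items\n"
    )
    for name, score in complexity_report(sample, threshold=3):
        print(f"{name}: {score}")
```

A ranked list like this is raw material only; turning it into a statement an executive can act on still requires linking the hotspots to effort or incident data.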

Automatic Change Impact Identification

"I changed just one line, but was told we need full system testing." "Nobody wants to touch this code because who knows what will break." "We need a two-week testing period before each release, which slows our release cycle." Unclear change impact causes excessive caution and slower development. In other cases, misjudged impact has caused production incidents.

Our AI agents analyze codebase dependencies and document the call relationships between modules. Knowing "What's impacted if this function changes?" and "Which tests should we run?" before making a change enables efficient, evidence-based test planning. This converts implicit knowledge from veterans' heads into explicit knowledge accessible to everyone.
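Once call relationships are known, the question "What's impacted if this function changes?" reduces to a graph traversal. The sketch below assumes the dependency data is already available as a simple caller-to-callees mapping; the function name `impacted_by` and the module names are hypothetical.

```python
from collections import deque


def impacted_by(changed: str, calls: dict[str, set[str]]) -> set[str]:
    """Return every module that directly or transitively calls `changed`.

    calls maps each caller to the set of modules it calls.
    """
    # Invert the graph: callee -> set of direct callers.
    callers: dict[str, set[str]] = {}
    for caller, callees in calls.items():
        for callee in callees:
            callers.setdefault(callee, set()).add(caller)

    # Breadth-first search upward through the callers; `seen` guards cycles.
    seen: set[str] = set()
    queue = deque([changed])
    while queue:
        current = queue.popleft()
        for caller in callers.get(current, ()):
            if caller != changed and caller not in seen:
                seen.add(caller)
                queue.append(caller)
    return seen


if __name__ == "__main__":
    # Hypothetical call graph.
    graph = {
        "cli": {"api"},
        "api": {"service"},
        "service": {"db"},
        "report": {"db"},
        "util": set(),
    }
    print(sorted(impacted_by("db", graph)))
    print(sorted(impacted_by("util", graph)))
```

Tests that exercise any module in the returned set are candidates to run; modules outside it can be deprioritized, which is what makes a narrower test plan defensible.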

Code Review Efficiency & Quality Standardization

Pull requests are submitted, but reviewers are too busy to review them promptly. Queued reviews delay merges and disrupt the development rhythm. Review quality varies by reviewer: veterans catch details while others do only surface checks. In some cases, security vulnerabilities have slipped through to release.

We implement AI agent-powered review support. When a pull request is created, AI performs a first-pass review to automatically detect potential bugs, security concerns, and coding standard inconsistencies. Human reviewers can then focus on design decisions and business logic validity, which only humans can judge. This standardizes review quality while reducing wait times.

Implementation Approach

When introducing AI to development processes, attempting full-scale deployment all at once often leads to confusion and disappointing results. We recommend a phased approach: first assess the current state accurately, then accumulate small wins, and gradually expand scope. This approach ultimately delivers the most reliable results.

Assessment

2-4 weeks: Current state analysis, challenge quantification, prioritization

Strategy Development

4-6 weeks: Target design, tool selection, roadmap creation

Pilot Implementation

2-4 months: Limited scope AI implementation, effectiveness verification

Rollout & Adoption

6-12 months: Scope expansion, process adoption, continuous improvement

Expected Effects (Based on Industry Research)

Qualitative Effects

- Shift engineers to creative work: Liberation from mundane tasks

- Eliminate knowledge silos: Knowledge becomes organizational asset

- Improved developer experience: Healthy development environment not buried in technical debt

- Enhanced hiring competitiveness: Showcase cutting-edge development environment

Why Qualiteg

Large-Scale Development & International Collaboration Experience

Qualiteg members have years of experience in large enterprise system development and international collaboration projects. Teams of tens to hundreds of developers, multi-site organizations, coordination across time zones—we've experienced many complex projects. This enables us to accurately estimate AI implementation impacts and design achievable transformation plans.

Hands-On AI Agent Experience

We actively use GitHub Copilot, Cursor, Claude Code, Devin, and various other code generation AI agents in our daily commercial software development, where we write actual production code. We know which tools work best in which situations, where the pitfalls are, and how to operate them so that they are actually adopted. We provide living knowledge from our own practice, not theoretical understanding.

Practical Manufacturing & System Development Knowledge

We have particularly deep experience in embedded software development for manufacturing and in enterprise system development. In quality-demanding manufacturing development processes and in enterprise environments that require legacy system integration, we've accumulated specific know-how on how to leverage AI and what to watch out for. We offer realistic implementation plans that account for on-the-ground constraints, not simplistic "AI will solve it" proposals.

AI × High-Quality Software Development Balance

AI-generated code is convenient, but without quality management it risks producing security holes and unmaintainable code. Qualiteg pursues both development efficiency through AI and high-quality software. From AI-generated code review processes to test automation integration and operational rules that don't increase technical debt—we provide end-to-end support for systems that protect quality, not just efficiency.

Keys to Success

Technical

- Phased implementation: Start small and learn, don't deploy everything at once

- Tool integration: Connect siloed tools to enable data and context flow

- Quality gates: Always build in AI output verification processes

- Security consideration: Thorough security review of AI-generated code

Organizational

- Executive commitment: Transformation requires investment and time

- Frontline engagement: Both top-down and bottom-up approaches

- Role redefinition: Clarify human roles in AI collaboration

- Skill shift support: Training for evolving engineer roles

Operational

- Continuous measurement: Regular KPI monitoring

- Feedback loops: Improvements reflecting frontline input

- Knowledge accumulation: Organizational learning from success and failure patterns

Our Approach to AI Software Development

We use AI coding tools in our daily development

At Qualiteg, we routinely use various AI coding tools, including Claude Code, Cursor, Windsurf, Aider, and Cline, in our own product development. We combine multiple tools, including internally developed ones, and choose each for a specific purpose in actual software development.

Through this practice, we've developed an intuitive understanding of each tool's strengths and weaknesses, as well as current technical limitations.

We tackle technical challenges head-on

While AI coding tools have great potential, hands-on production use reveals challenges such as the following:

- Context overflow in long sessions

- Context not carrying over between sessions

- Gap between benchmark performance and real codebase performance

We analyze and publish these structural challenges on our technical blog, accumulating knowledge on "how to make these tools work in production."

We've witnessed software development evolution

Our team includes members with over 35 years of software development experience. We've witnessed on the ground what worked and what didn't across technology trends like SOA, microservices, and cloud.

From that perspective, the current evolution of AI-driven development support feels qualitatively different from past transformations: working solutions are emerging one after another.

We share grounded, realistic insights

We don't want to overestimate or underestimate AI tool capabilities.

"Here's what works, here's what's difficult," "This tool suits this purpose," "Here's how we handle this challenge"—we aim to share these grounded insights through our consulting.

CONTACT

Contact Us

For questions or consultations about AI Technology Consulting, please feel free to contact us.